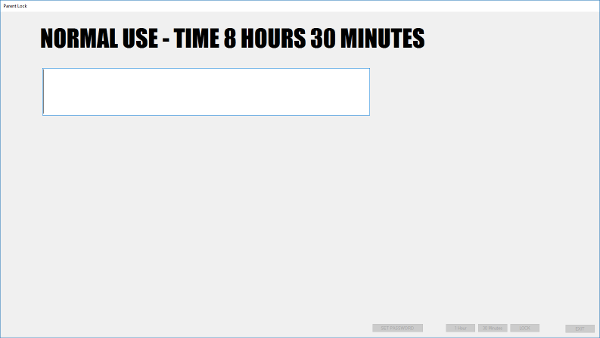

I wrote [an app] that puts a lock screen on the kids’ computers after bedtime. The app launches on startup and, while locked, cannot be killed, closed, minimized, moved, or switched away from. Entering a password temporarily delays the lock by 30 minutes or an hour.
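
To make the locking rule concrete, here is a minimal Python sketch of the scheduling logic only, not the app's actual code. The bedtime window (`BEDTIME`, `WAKE_TIME`), the `LockSchedule` class, and its method names are all assumptions made up for illustration; the only details taken from the description above are the two delay lengths. Password checking and the lock screen window itself are left out.

```python
from datetime import datetime, time, timedelta
from typing import Optional

# Hypothetical bedtime window; the real app's hours are not stated.
BEDTIME = time(21, 0)
WAKE_TIME = time(7, 0)

# The two delay lengths mentioned above: 30 minutes or an hour.
ALLOWED_DELAYS = (timedelta(minutes=30), timedelta(hours=1))


def in_bedtime_window(now: datetime) -> bool:
    """True if `now` falls in the overnight window that wraps past midnight."""
    t = now.time()
    return t >= BEDTIME or t < WAKE_TIME


class LockSchedule:
    """Tracks whether the screen should be locked at a given moment."""

    def __init__(self) -> None:
        # End of the current temporary delay, if one is active.
        self.unlocked_until: Optional[datetime] = None

    def delay(self, now: datetime, duration: timedelta) -> None:
        """Postpone the lock; assumes the caller already verified the password."""
        if duration not in ALLOWED_DELAYS:
            raise ValueError("delay must be 30 minutes or one hour")
        self.unlocked_until = now + duration

    def is_locked(self, now: datetime) -> bool:
        """Locked during the bedtime window unless a delay is still running."""
        if self.unlocked_until is not None and now < self.unlocked_until:
            return False
        return in_bedtime_window(now)
```

In a sketch like this, the UI would poll `is_locked(datetime.now())` on a timer and show or hide the lock screen accordingly, calling `delay(...)` only after a correct password is entered.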