Building a River Landscape in Unreal

Building Super Detailed Landscape Cinematics in Unreal Engine 5.4

Join Microsoft Dev Essentials

[Microsoft Dev Essentials] provides access to legacy Visual Studio downloads, e.g. VS 2017 Community for UE 4.21.

[Setting Up Visual Studio for Unreal Engine]

Visual Studio 2017 Community – File name: mu_visual_studio_community_2017_version_15.3_x86_x64_11100062.exe

Unity MUSE AI Now In The EDITOR!

Prototype a 2D game level in 20 minutes with Unity Muse | Unite 2023

Image to Video Generation

New Disruptive Microchip Technology and the Death of Memory

How to upload and activate a WordPress plugin ZIP file

Mechwarrior 5 Modding Toolkit

Apple joins OpenAI Board, OpenAI Huge Hack, Skeleton Jailbreak, GPT4ALL 3.0

2D Pixel Art Character Template Asset Pack

Adam Savage Meets Nerdforge’s Martina and Hansi!

Common macros for MSBuild commands and properties

Nvidia PhysX Physics Engine

NVIDIA [PhysX SDK]

NVIDIA [PhysX Visual Debugger]

Repository: NVIDIA [Sample Snippets]

Repository: tgraupmann [Cpp_Physx_Overlap_Snippet]

Unity Particle Pack

Procedural Terrain Painter

Unity WebSockets

Jack Black Character Course

AI News: ChatGPT is in BIG Trouble

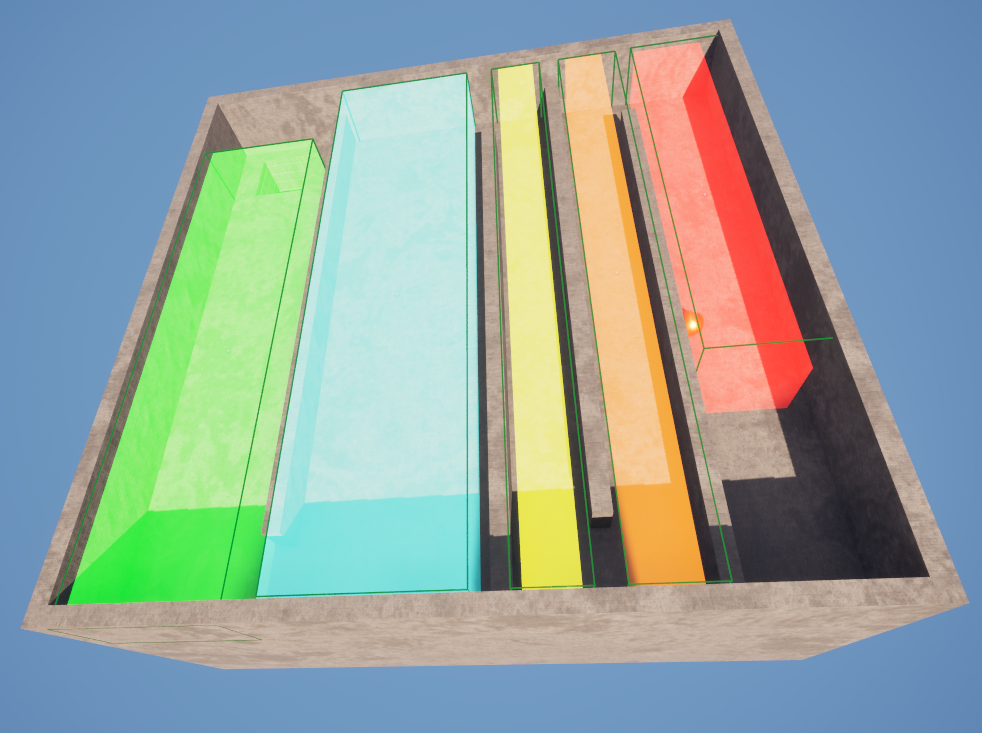

UE Puzzle Game

[UE Puzzle Game], made in UE 5.5, controls RGB lighting and haptics.

Making 1 MILLION Token Context LLaMA 3 (Interview)

Ex-OpenAI Employee Reveals TERRIFYING Future of AI

UE5 Geometry Brushes and Brush Editing Mode Basics

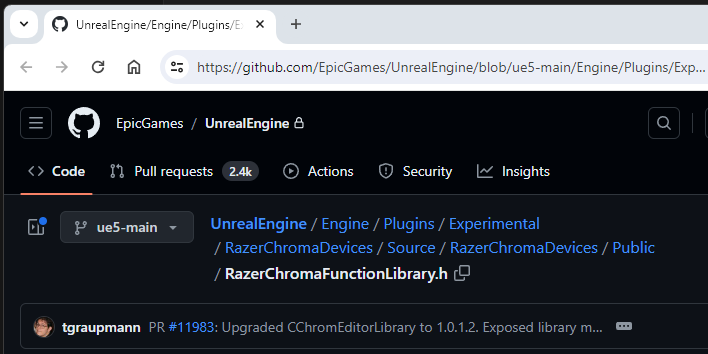

Unreal Engine 5.5 – Chroma Sensa

After a decade of making engine plugins for Unreal, I finally had code accepted into the [main UE 5.5 engine code base]. Chroma Sensa controls RGB lighting and haptics. [Pull Request 11983]