- LlamaForCausalLM

- MistralForCausalLM

- RWForCausalLM

- FalconForCausalLM

- GPTNeoXForCausalLM

- GPTBigCodeForCausalLM
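To check a downloaded model against this list, you can read the `architectures` field of its `config.json`. The sketch below is a hypothetical helper; `arch_in_list` is not part of any tool mentioned here. It assumes the usual Hugging Face `config.json` layout and reads only the first entry of that array. It uses `tr` and `sed` rather than a real JSON parser, so treat it as a quick check only.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report whether a model's config.json declares one of
# the architectures listed above. Assumes the standard Hugging Face layout,
# e.g. "architectures": ["MistralForCausalLM"], and reads only the first entry.
arch_in_list() {
  local config="$1" arch
  # Drop newlines and spaces so the array sits on one line, then capture
  # the first quoted name inside "architectures": [ ... ].
  arch=$(tr -d '\n ' < "$config" |
    sed -n 's/.*"architectures":\["\([A-Za-z0-9_]*\)".*/\1/p')
  case "$arch" in
    LlamaForCausalLM | MistralForCausalLM | RWForCausalLM | \
    FalconForCausalLM | GPTNeoXForCausalLM | GPTBigCodeForCausalLM)
      echo "listed: $arch" ;;
    *)
      echo "not listed: ${arch:-unknown}"
      return 1 ;;
  esac
}

# Usage, from the folder holding the downloaded files:
#   arch_in_list config.json
```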

mistral7b_ocr_to_json_v1: architecture `MistralForCausalLM`

Fine-tuned LLM that converts a receipt image to JSON or XML.

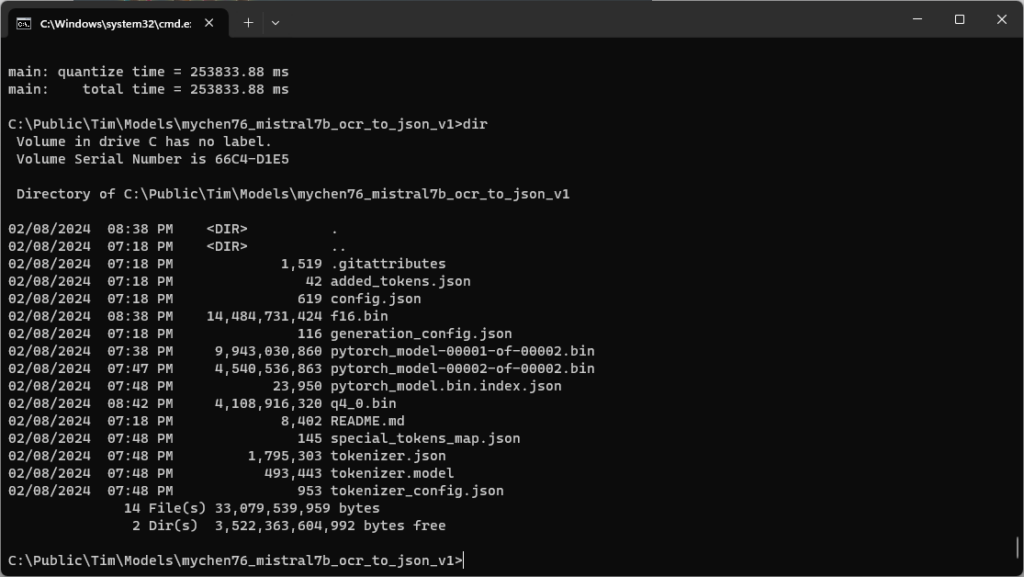

On WSL2, get hfdownloader and download the model:

```bash
bash <(curl -sSL https://g.bodaay.io/hfd) -h
./hfdownloader -s . -m mychen76/mistral7b_ocr_to_json_v1
```
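Before quantizing, you may want to confirm that the folder actually contains a model. The `has_model_files` check below is a hypothetical sketch. It assumes a Hugging Face layout: a `config.json` plus weights in `*.safetensors` or `*.bin` files. Point it at whichever folder hfdownloader wrote the files to.

```bash
#!/usr/bin/env bash
# Hypothetical sanity check: does a folder look like a downloaded Hugging Face
# model? Assumes a config.json plus *.safetensors or *.bin weight files.
has_model_files() {
  local dir="${1:-.}"
  if [ ! -f "$dir/config.json" ]; then
    echo "missing config.json in $dir"
    return 1
  fi
  # compgen -G exits 0 only when the glob matches at least one path
  if compgen -G "$dir/*.safetensors" > /dev/null ||
     compgen -G "$dir/*.bin" > /dev/null; then
    echo "ok: $dir"
  else
    echo "no *.safetensors or *.bin files in $dir"
    return 1
  fi
}

# Usage: has_model_files path/to/downloaded/model
```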

In a Windows Command Prompt, pull the quantization image:

```
docker pull ollama/quantize
```

After the download completes, run the quantizer on the current folder:

```
docker run --rm -v .:/model ollama/quantize -q q4_0 /model
```

Create a text file named `Modelfile` (no extension) with the following contents:

```
FROM ./q4_0.bin
TEMPLATE """[INST] {{ .System }} {{ .Prompt }} [/INST]"""
```
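If you would rather create the Modelfile from a shell (for example in WSL2) than in a text editor, a quoted heredoc writes it verbatim. The function name `write_modelfile` is only illustrative. The triple quotes around the `TEMPLATE` value follow Ollama's Modelfile syntax for template strings.

```bash
#!/usr/bin/env bash
# Writes the Modelfile for this model into a folder (default: current folder).
# The quoted 'EOF' delimiter turns off $-expansion and backslash processing,
# so the body is written exactly as shown.
write_modelfile() {
  local dir="${1:-.}"
  cat > "$dir/Modelfile" <<'EOF'
FROM ./q4_0.bin
TEMPLATE """[INST] {{ .System }} {{ .Prompt }} [/INST]"""
EOF
}

# Usage, from the folder that holds q4_0.bin:
#   write_modelfile
```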

Create a folder for the model in the `ollama` Docker container:

```
docker exec -it ollama mkdir /model
```

Copy all the local files into the container's `/model` folder:

```
docker cp . ollama:/model
```

Create the model:

```
docker exec -it ollama ollama create mychen76_mistral7b_ocr_to_json_v1 -f /model/Modelfile
```

Run the model:

```
docker exec -it ollama ollama run mychen76_mistral7b_ocr_to_json_v1
```

After changing the Modelfile, copy the updated file into the container:

```
docker cp Modelfile ollama:/model
```

Repeat the create model step as needed.
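From a bash shell where Docker is reachable (for example WSL2 with Docker Desktop), the copy and create steps can be wrapped in a small helper. This is a hypothetical sketch. It assumes the container is named `ollama`, and it derives the Ollama model name from the Hugging Face repo id by replacing `/` with `_`, matching the commands used here. Setting `DRY_RUN=1` prints the commands instead of running them, which is a cheap way to inspect them first.

```bash
#!/usr/bin/env bash
# Hypothetical helper that repeats the copy and create steps.
# Assumes the container is named "ollama" and that the Ollama model name is
# the Hugging Face repo id with "/" replaced by "_".
# With DRY_RUN=1 it prints the commands instead of running them.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

rebuild_model() {
  local repo="$1"
  local name="${repo//\//_}"   # mychen76/x -> mychen76_x
  run docker cp Modelfile ollama:/model || return 1
  # -it is omitted: create needs no interactive terminal, and -t fails
  # when the script is not attached to one.
  run docker exec ollama ollama create "$name" -f /model/Modelfile
}

# Usage, from the folder holding the edited Modelfile:
#   rebuild_model mychen76/mistral7b_ocr_to_json_v1
```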